Graal VM and Truffle. Proof of concept with Tiger language

GraalVM serves as a universal virtual machine designed to run applications authored in various languages including JavaScript, Python 3, Ruby, R, JVM-based languages such as Java, Scala, Kotlin, and LLVM-based languages like C and C++. By eliminating the barriers between different programming languages, it facilitates seamless interoperability within a shared runtime. It’s versatile, capable of operating either standalone or within the context of OpenJDK, Node.js, Oracle Database, or MySQL. Simply put, GraalVM enhances the Java Virtual Machine at a fundamental level[1]. Interestingly, certain benchmarks have demonstrated that a basic implementation of the JavaScript Engine using GraalVM outperforms the Google V8 Engine[2].

As part of a proof of concept to examine execution time and memory usage in interpreting a custom language, we chose to write the target codes in Tiger. This language, primarily used in academic settings, is designed to challenge students’ abilities due to its inherent complexities. However, for the sake of simplicity and to streamline the proof of concept, we made minor modifications to the language’s original specification.

Tiger language modification

To expedite the development process of the proof-of-concept, we’ve made alterations to the Tiger language specification, thereby simplifying it.

Principal modifications:

-

This implementation does not have Record types, and then, there are not Record fields or Record field access

-

There is double type support (\d+\.\d+)

- There are only three builtin functions:

-

print (print the String representation of the first argument):

1 2

print(19) > 19

1 2

print("hello world") > hello world

1 2

print(nano_time()) > ...

- nano_time (returns the System.nanoTime() Java function result)

-

wait (Sleep the current thread by x milliseconds where x is the first argument)

-

There is no type of declaration or check. All types and values are created and assigned dynamically

- There are no comment declarations

Graal VM and Truffle

The Truffle framework enhances the efficiency of running various programming languages on GraalVM. By automatically generating high-performance code from interpreters, it simplifies the process of language implementation. Truffle facilitates the straightforward conversion of custom ASTs into Graal AST nodes. Despite introducing another layer of abstraction, this API statically generates code through Java annotations, which eliminates additional code levels during the interpretation stage. Therefore, the final result consists solely of Graal code.

Types

Every language utilizes the abstraction of specific types, essentially grouping data by its characteristics. Even though some sources suggest certain languages lack types, this assertion isn’t entirely accurate. Consider the operation 2 + 2, which invariably yields the integer four - a numeric value that has been recognizable since time immemorial.

Our interpretation of Tiger includes four primitive types in the interpretation stage.

These are the types mapped using Java POO patterns definition:

- Long: to represent integers from

-(2**64 - 1)to2**64 - Double: to represent floating comma numbers with, 1 bit for sign, 11 bits for exponent and 52 bits for the fractional part.

- Function: this is a custom function object implementation

- Nil: every expression in Tiger returns to value, nil is the default one

Parsing

The parsing of Tiger script is facilitated by the ANTLR 4.0 framework, which generates a fundamental Abstract Syntax Tree (AST) to evaluate the script. While the initial approach to interpret the source code might involve visiting the obtained AST, the primary objective of this work is to explore and leverage the capabilities of Graal and Truffle.

I utilize the previously generated AST to convert it into a Truffle tree. Most compiler implementations typically involve four key stages: 1 - Tokenization, 2 - Creation of a tree based on the grammar, 3 - Semantic analysis, and 4 - Code generation. The ANTLR framework is employed to accomplish the first two stages, while the latter two stages fall within the purview of Graal and Truffle.

Variable Scope

Variable and function scopes are structured in a parent-child tree format. Each scope inherently has a parent scope. When a variable is absent in the current scope, it triggers a search in the parent scope. This process continues up the tree until the variable is located, or if no parent scope remains. In the latter case, it throws a “The variable x is not defined” exception.

1

2

3

4

5

6

7

8

| a. 1 |

| b, "hello world" | <-

|

| a, 10 |

| z, 1000|

|c, 1 |

Performance

In dynamic languages, operations such as multiplication ‘*’, division ‘/’, or a less-than ‘<’ comparison are often determined only at runtime. To achieve maximum performance, a language implementation should aim to prevent repetitive lookups for these operators with each operation.

Initial observations from my approach to variable (read, write) and function lookups indicate substantial overhead in execution time, almost ten times that of the final execution result.

Variable lookup

All variable and function arguments are stored in FrameSlots, which is the Truffle API’s method for value storage. I have implemented a strategy where every variable access (both read and write) has its address stored in its respective AST Node. This eliminates the need for traversing the Scope tree structure each time there is a read/write call. These variable context structures are constructed during the semantic analysis stage and written into the node as a Java field, allowing for O(1) access.

The Truffle API offers options for reading and writing values that can effectively circumvent the need for boxing and unboxing these values. However, when passing arguments to a function call (corresponding to the Graal RootNode class), the values must be passed as Object values. One solution to this is to minimize the number of unboxing operations. The only instances of unboxing operations occur in the ReadArg node evaluation within the AST.

Function calls

Truffle can assign function call arguments within a class named Frame, which is optimized for execution in Graal VM.

Tiger language incorporates nested functions and argument scopes. Consequently, I introduced an extra argument in the Frame object passed to a function call.

When passing arguments to a function call, I structure them as follows:

- The first argument is the current branch Frame

- Subsequent arguments consist of the evaluated expressions for the current Tiger function calle next arguments are the evaluated expressions for the current Tiger function call

1

2

3

4

5

6

7

function a(n)=

let

function b(r)=

print(r + n) // to access the n value we have to access the first argument in the b call and then the frame argument of the scope

in

b(n)

end

Polymorphic Inline

Polymorphic inline caches are mechanisms that enhance the efficiency of function and property lookup in dynamic languages. These are typically implemented utilizing assembler code and code patching techniques.

Tests

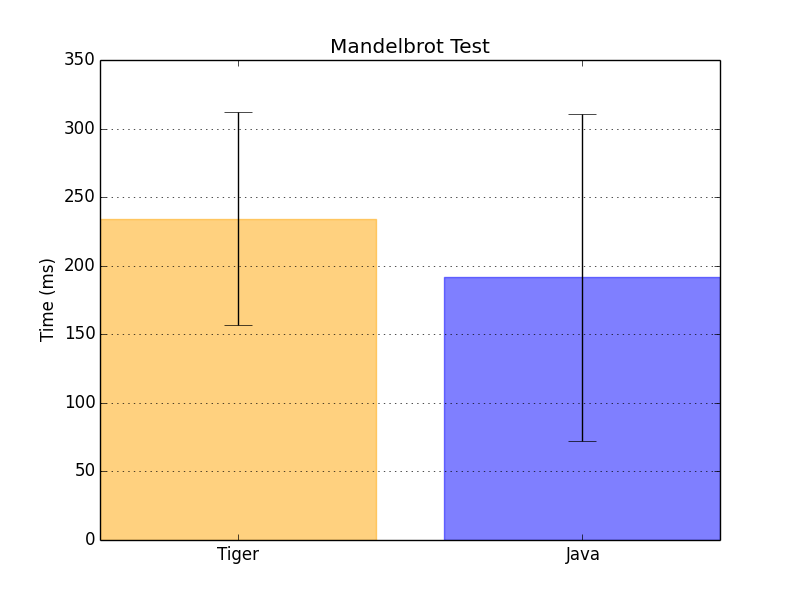

The classic Mandelbrot benchmark designed for Java was put to the test against a tailor-made Tiger Mandelbrot test.

Tests Results and remarks

The findings from this comparison are quite promising. The noticeable divergence between the mean, maximum, and minimum execution time values is attributable to Graal VM’s ability to inline function calls and cache the most frequently used nodes (like Integer constants). As a result, initial calls experience significant delays, but this is a characteristic of Java run effects, as evidenced in the above figure.

Here you can find the source code of this cool project.

Future research

- Tail call optimization:

In related works [3] the author proposes to implement a Tail Call optimization showing very good results for recursive function calls

- IGV profiling to detect operations time overloads and unexpected nodes construction