Remote buildkit agents to speed up Docker builds by 10 times

The illusion of caching in Docker builds on CI/CD runners

In most modern CI/CD setups, we package our applications into container images, and those images are built inside ephemeral runners: short-lived containers or virtual machines that spin up for a single pipeline job and are destroyed once it finishes. This architecture scales beautifully but introduces one major performance bottleneck: the build cache is lost after every job.

Every new CI job starts from scratch. Docker has to pull base images again, reinstall packages, and rebuild binaries that haven’t changed in weeks. For teams building large projects, this can mean waiting 10–15 minutes for a build that could, in theory, finish in just a few seconds.

Fast image builds are critical for developer productivity and feedback loops. The quicker a build completes, the faster developers can iterate and ship changes. Faster builds also reduce compute usage and therefore cost — especially in cloud CI environments. And by shortening individual build times, we reduce queue wait time for everyone else, improving the overall efficiency and throughput of the entire CI/CD system.

At first glance, Docker’s layer caching and GitHub/GitLab cache features seem like the solution. A simple approach might be to mount a GitHub or GitLab cache folder in the runner’s filesystem and use BuildKit’s cache export/import flags:

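```shell
# Local cache export needs a builder using the docker-container (or remote) driver;
# the default "docker" driver does not support it unless the containerd image store is enabled.
docker buildx create --driver docker-container --use
docker buildx build \
  --cache-to=type=local,dest=/path/to/mounted/cache \
  --cache-from=type=local,src=/path/to/mounted/cache .
```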

That persists the image layer cache between runs and can even let an unchanged node_modules layer be reused. However, it does nothing for the contents of cache mounts, which live in BuildKit's own internal storage rather than in image layers.

The real culprit: --mount=type=cache

The --mount=type=cache option is what makes BuildKit builds really fast.

Cache mounts let a RUN step reuse a directory (like ~/.cache/yarn or /go/pkg/mod) across builds without baking it into an image layer.

But unless BuildKit itself has persistent storage, that directory vanishes as soon as the builder is torn down.

The result? Every single CI run downloads the same dependencies and rebuilds the same binaries again and again. And this is why, even in 2025, most Docker builds in CI are still slower than local builds on a developer’s laptop.

Even if you use --cache-to and --cache-from, those only export image layers, not the contents of the cache mounts created by BuildKit at build time.

For example:

```dockerfile
# Dockerfile
RUN --mount=type=cache,target=/home/node/.cache/yarn \
    yarn install --frozen-lockfile
```

BuildKit stores that cached directory in its own state directory on the builder, for example /var/lib/buildkit in a rootful BuildKit container, or /home/user/.local/share/buildkit in the rootless image used later in this post.
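To see these records on a builder, buildx can report disk usage filtered by record type. The small sketch below assumes a buildx version whose du command accepts the same --filter type=... syntax as buildx prune; show_cache_mounts is a hypothetical helper name. On a freshly created builder, as on an ephemeral runner, the list starts out empty at the beginning of every job.

```shell
#!/usr/bin/env bash
# show_cache_mounts [BUILDER]: list the --mount=type=cache records held by a
# builder (defaults to the currently selected builder).
# Assumption: `docker buildx du` accepts --filter type=exec.cachemount.
show_cache_mounts() {
  local args=(buildx du --filter type=exec.cachemount --verbose)
  if [ -n "${1:-}" ]; then
    args+=(--builder "$1")
  fi
  docker "${args[@]}"
}
```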

If the runner is ephemeral (as with GitHub-hosted runners, GitLab's hosted runners, or autoscaled self-hosted runners in the cloud), that directory disappears after the job ends.

So even though your Dockerfile says “use cache”, there’s nothing persistent to reuse between builds.

Platforms such as depot.dev appeared precisely to solve this.

They host remote BuildKit agents with persistent disk-backed caches.

Instead of building locally or inside a short-lived CI container, your build context is sent to a remote BuildKit daemon that reuses cached layers and --mount=type=cache data across builds.

The result: the same Dockerfile, the same syntax — but builds that run 5–10× faster.

Our problems

Our monorepo contains multiple services written in Node.js and Go — each with its own Dockerfile. Yet despite enabling GitLab CI’s layer caching, every pipeline run took longer than expected.

The culprit: our CI runners are ephemeral.

Each job spins up a fresh runner that starts with an empty BuildKit cache and vanishes once the build finishes.

This means that cache mounts created with --mount=type=cache have nowhere persistent to live, forcing every build to download dependencies and rebuild binaries from scratch.

The solution is clear — we need persistent builders. We deploy a remote BuildKit daemon with persistent storage in our Kubernetes cluster, ensuring that cache data survives across builds and can be reused by all CI jobs.

Setting up a remote BuildKit deployment

We deploy a BuildKit daemon in our Kubernetes cluster using a Deployment and expose it via a Service of type LoadBalancer.

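Exposing this service to the internet is acceptable as long as mTLS is enforced: the daemon then rejects any client that cannot present a certificate signed by our CA. If your runners connect from known addresses, restricting the Service with loadBalancerSourceRanges adds a second layer of protection.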

Here are the Deployment and Service manifests we used:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: buildkit-agent
  name: chatlayer-buildkit-buildkit-agent
  namespace: buildx
spec:
  # Exactly one daemon: two buildkitd processes must never share one state directory.
  replicas: 1
  # The ReadWriteOnce volume can only be used by one pod at a time. With the default
  # RollingUpdate, the new pod waits on the volume while the old one keeps running.
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: buildkit-agent
      release: chatlayer-buildkit
  template:
    metadata:
      labels:
        app: buildkit-agent
        release: chatlayer-buildkit
    spec:
      containers:
        - args:
            - "--addr"
            - unix:///run/user/1000/buildkit/buildkitd.sock
            - "--addr"
            - tcp://0.0.0.0:1234
            - "--tlscacert"
            - /certs/ca.pem
            - "--tlscert"
            - /certs/cert.pem
            - "--tlskey"
            - /certs/key.pem
            - "--oci-worker-no-process-sandbox"
          image: moby/buildkit:master-rootless
          imagePullPolicy: IfNotPresent
          livenessProbe:
            exec:
              command:
                - buildctl
                - debug
                - workers
          name: buildkitd
          ports:
            - containerPort: 1234
              name: buildkit
              protocol: TCP
          readinessProbe:
            exec:
              command:
                - buildctl
                - debug
                - workers
          resources:
            limits:
              cpu: "3"
              memory: 8Gi
            requests:
              cpu: 500m
              memory: 1536Mi
          securityContext:
            appArmorProfile:
              type: Unconfined
            runAsGroup: 1000
            runAsUser: 1000
            seccompProfile:
              type: Unconfined
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /certs
              name: certs
              readOnly: true
            - mountPath: /home/user/.config/buildkit
              name: config
            - mountPath: /home/user/.local/share/buildkit
              name: buildkitd
      dnsPolicy: ClusterFirst
      initContainers:
        - command:
            - sh
            - "-c"
            - >-
              rm -f /home/user/.local/share/buildkit/buildkitd.lock && chown -R
              1000:1000 /home/user/.local/share/buildkit
          image: busybox:latest
          imagePullPolicy: IfNotPresent
          name: fix-permissions
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /home/user/.local/share/buildkit
              name: buildkitd
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - name: certs
          secret:
            defaultMode: 420
            secretName: buildkit-tls
        - configMap:
            defaultMode: 420
            name: chatlayer-buildkit-buildkit-agent-config
          name: config
        - name: buildkitd
          persistentVolumeClaim:
            claimName: chatlayer-buildkit-buildkit-agent
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: buildkit-agent
    argocd.argoproj.io/instance: buildkit
  name: chatlayer-buildkit-buildkit-agent
  namespace: buildx
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: ...
  clusterIPs:
    - ...
  externalTrafficPolicy: Local
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: buildkit
      nodePort: 30450
      port: 1234
      protocol: TCP
      targetPort: 1234
  selector:
    app: buildkit-agent
    release: chatlayer-buildkit
  type: LoadBalancer
```

Note that a StatefulSet would be a better fit for this use case, but for simplicity we used a Deployment with a single replica. The main inspiration for this setup was the rootless deployment+service example in the examples/kubernetes directory of the moby/buildkit repository.

Let’s dissect the important parts.

Rootless BuildKit

First, we use the moby/buildkit:master-rootless image to run the BuildKit daemon in rootless mode. We don’t want to run as root inside our cluster — that is a very basic security principle.

TLS configuration

Second, we configure the BuildKit daemon to use TLS. We mount the certificates from a Kubernetes Secret and pass their paths to buildkitd with --tlscacert, --tlscert and --tlskey. Because a CA certificate is configured, the daemon requires clients to present a certificate signed by that CA, so all communication between client and daemon is both encrypted and mutually authenticated. Our CI pipelines later use the same CA, together with a client certificate, to connect to the remote builder.

To generate the TLS certificates, we used OpenSSL with the following script:

```bash
#!/usr/bin/env bash
set -euo pipefail

# === CONFIGURATION ===
SERVER_IP=${1:-"127.0.0.1"} # pass your public IP if needed (e.g., ./generate-buildkit-certs.sh 1.1.1.1)
DAYS=3650

echo "Generating BuildKit CA, Server, and Client certificates for IP: ${SERVER_IP}"
mkdir -p buildkit-certs
cd buildkit-certs

# === 1) Root CA ===
openssl genrsa -out ca.key 4096
openssl req -x509 -new -nodes -key ca.key -sha256 -days $DAYS \
  -subj "/CN=BuildKit CA" -out ca.pem

# === 2) Server Certificate ===
cat > server-ext.cnf <<EOF
subjectAltName = @alt_names
basicConstraints = CA:FALSE
keyUsage = digitalSignature, keyEncipherment
extendedKeyUsage = serverAuth

[alt_names]
IP.1 = ${SERVER_IP}
IP.2 = 127.0.0.1
DNS.1 = localhost
EOF

openssl genrsa -out server-key.pem 4096
openssl req -new -key server-key.pem -subj "/CN=${SERVER_IP}" -out server.csr
openssl x509 -req -in server.csr -CA ca.pem -CAkey ca.key -CAcreateserial \
  -out server-cert.pem -days $DAYS -sha256 -extfile server-ext.cnf

# === 3) Client Certificate ===
cat > client-ext.cnf <<EOF
basicConstraints = CA:FALSE
keyUsage = digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth
EOF

openssl genrsa -out client-key.pem 4096
openssl req -new -key client-key.pem -subj "/CN=BuildKit Client" -out client.csr
openssl x509 -req -in client.csr -CA ca.pem -CAkey ca.key -CAcreateserial \
  -out client-cert.pem -days $DAYS -sha256 -extfile client-ext.cnf

# === 4) Clean up CSRs and temp files ===
rm -f *.csr *.cnf *.srl

echo
echo "✅ Certificates generated in $(pwd):"
ls -1
```

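Besides the CA private key ca.key (only needed to sign new certificates, so keep it out of the cluster and out of CI), the script produces five files: ca.pem, server-cert.pem, server-key.pem, client-cert.pem and client-key.pem. The daemon uses ca.pem, server-cert.pem and server-key.pem; the client uses client-cert.pem and client-key.pem to authenticate itself, plus ca.pem to verify the daemon. We store the daemon's files in the Kubernetes Secret named buildkit-tls, under the cert.pem and key.pem keys that the Deployment's arguments expect: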

```yaml
apiVersion: v1
data:
  ca.pem: <ca.pem file content base64 encoded>
  cert.pem: <server-cert.pem file content base64 encoded>
  key.pem: <server-key.pem file content base64 encoded>
kind: Secret
metadata:
  name: buildkit-tls
  namespace: buildx
type: Opaque
```
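Before creating that Secret, it can be worth checking that the certificates fit together, and kubectl create secret generic with --from-file=key=path can do the base64 encoding for you. The sketch below assumes OpenSSL 1.1.1 or newer, the file names produced by the certificate script, and kubectl access to the cluster; verify_buildkit_certs and create_buildkit_secret are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch, assuming OpenSSL >= 1.1.1 and kubectl configured for the cluster.
# verify_buildkit_certs and create_buildkit_secret are hypothetical helpers.

# verify_buildkit_certs DIR IP
# Succeeds only if both leaf certificates chain to DIR/ca.pem with the right
# purpose and the server certificate is valid for IP (checked against its SAN).
verify_buildkit_certs() {
  local dir=$1 ip=$2
  openssl verify -CAfile "$dir/ca.pem" -purpose sslserver -verify_ip "$ip" \
    "$dir/server-cert.pem" >/dev/null || return 1
  openssl verify -CAfile "$dir/ca.pem" -purpose sslclient \
    "$dir/client-cert.pem" >/dev/null || return 1
}

# create_buildkit_secret DIR
# Stores the daemon's files under the key names the Deployment mounts at /certs;
# kubectl base64-encodes the file contents itself.
create_buildkit_secret() {
  local dir=$1
  kubectl create secret generic buildkit-tls --namespace buildx \
    --from-file=ca.pem="$dir/ca.pem" \
    --from-file=cert.pem="$dir/server-cert.pem" \
    --from-file=key.pem="$dir/server-key.pem"
}
```

For example, verify_buildkit_certs buildkit-certs 1.1.1.1 && create_buildkit_secret buildkit-certs, where 1.1.1.1 stands for the IP passed to the certificate script.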

Persistent storage

Third, we create a PersistentVolumeClaim to provide persistent storage for the BuildKit daemon. This ensures that the build cache and intermediate files are preserved across restarts of the BuildKit pod. Notice that we mount the volume at /home/user/.local/share/buildkit, which is the default location where BuildKit stores its data in rootless mode. Thus, cache mounts created with --mount=type=cache will be stored persistently in that volume and reused across builds.

Here we define the PersistentVolumeClaim:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  labels:
    app: buildkit-agent
    argocd.argoproj.io/instance: buildkit
  name: chatlayer-buildkit-buildkit-agent
  namespace: buildx
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
  storageClassName: standard-rwo
  volumeMode: Filesystem
status:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 50Gi
  phase: Bound
```

Garbage collection

Finally, we need a garbage collection policy to remove old and unused cache data from the persistent volume. This is why the Deployment mounts a second volume at /home/user/.config/buildkit, which is where rootless BuildKit looks for its buildkitd.toml configuration file. We provide that file through the chatlayer-buildkit-buildkit-agent-config ConfigMap and use it to define the garbage collection settings, such as how much cache data to keep and for how long. Here is our configuration:

```toml
root = "/home/user/.local/share/buildkit"

[worker]
  [worker.containerd]
    enabled = false

  [worker.oci]
    enabled = true
    gc = true
    gckeepstorage = "32GB"
    snapshotter = "overlayfs"

    [[worker.oci.gcpolicy]]
      filters = ["type==source.local","type==exec.cachemount","type==source.git.checkout"]
      keepBytes = "16GB"
      keepDuration = 604800 # 7 days (seconds)

    # Everything else: hard cap
    [[worker.oci.gcpolicy]]
      all = true
      keepBytes = "32GB"
```

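With this configuration, BuildKit keeps at most 32GB of cache data, which leaves headroom on the 50Gi volume. The first policy targets local build contexts, cache mounts and Git checkouts: entries of those types that have not been used for 7 days are pruned, and together they are limited to 16GB. The second policy applies to all records and enforces the 32GB cap. This keeps storage usage bounded, so the BuildKit daemon does not run out of space over time.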
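Because the Deployment reads this file from a ConfigMap, the TOML has to be stored under the key buildkitd.toml, the file name BuildKit looks for in that directory. Below is a minimal sketch, assuming kubectl access and a local copy of the file; apply_buildkitd_config is a hypothetical helper name. buildkitd reads its configuration at startup (as far as we know it does not reload it on its own), so the sketch restarts the pod afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: publish buildkitd.toml into the ConfigMap mounted at
# /home/user/.config/buildkit, then restart the daemon so it reads the new file.
apply_buildkitd_config() {
  local toml=${1:-buildkitd.toml}
  local ns=buildx
  local name=chatlayer-buildkit-buildkit-agent
  [ -f "$toml" ] || { echo "missing $toml" >&2; return 1; }

  # Render the ConfigMap client-side and apply it, so re-running updates it in place.
  kubectl create configmap "${name}-config" --namespace "$ns" \
    --from-file=buildkitd.toml="$toml" \
    --dry-run=client -o yaml | kubectl apply -f - || return 1

  # Restart the pod and wait until the new one is ready.
  kubectl rollout restart "deployment/${name}" --namespace "$ns" || return 1
  kubectl rollout status "deployment/${name}" --namespace "$ns" --timeout=5m
}
```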

The CI/CD pipeline configuration

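Apart from the CA certificate and the client certificate and key, the CI job only needs the external IP address of the LoadBalancer Service to reach the remote BuildKit daemon. That IP must be the one passed to the certificate script, because the client checks it against the server certificate's subjectAltName. We saved these four values as CI/CD variables in our GitLab project (the certificates base64-encoded), so a job can run the snippet below: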

```shell
echo "Starting remote buildx builder ..."
mkdir -p ~/.docker/buildx/certs
echo "$BUILDKIT_CA_CERT_B64" | base64 -d > ~/.docker/buildx/certs/ca.pem
echo "$BUILDKIT_CLIENT_CERT_B64" | base64 -d > ~/.docker/buildx/certs/cert.pem
echo "$BUILDKIT_CLIENT_KEY_B64" | base64 -d > ~/.docker/buildx/certs/key.pem

docker buildx create --driver remote "tcp://${BUILDKIT_HOST}:1234" \
  --driver-opt "cacert=$HOME/.docker/buildx/certs/ca.pem" \
  --driver-opt "cert=$HOME/.docker/buildx/certs/cert.pem" \
  --driver-opt "key=$HOME/.docker/buildx/certs/key.pem" \
  --use
```

Now, any subsequent docker buildx build command in the CI job uses the remote BuildKit daemon to build images. Our remote builder is a single-replica Kubernetes Deployment limited to 3 CPU cores and 8Gi of RAM, which is more than enough for our use case.
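On a runner that is reused between jobs, the snippet above tries to create a new builder on every run, and TLS or network problems only surface at the first build. A slightly more defensive variant might look like the sketch below; the builder name remote-buildkit and the function name are hypothetical, and the variables are the same four CI/CD variables. docker buildx inspect --bootstrap connects to the daemon, so a wrong IP or a rejected certificate should fail the job before any build starts.

```shell
#!/usr/bin/env bash
# Hypothetical helper; expects BUILDKIT_HOST, BUILDKIT_CA_CERT_B64,
# BUILDKIT_CLIENT_CERT_B64 and BUILDKIT_CLIENT_KEY_B64 in the environment.
setup_remote_builder() {
  local name=${1:-remote-buildkit}
  local certs="$HOME/.docker/buildx/certs"
  mkdir -p "$certs"

  printf '%s' "$BUILDKIT_CA_CERT_B64" | base64 -d > "$certs/ca.pem"
  printf '%s' "$BUILDKIT_CLIENT_CERT_B64" | base64 -d > "$certs/cert.pem"
  printf '%s' "$BUILDKIT_CLIENT_KEY_B64" | base64 -d > "$certs/key.pem"
  chmod 600 "$certs/key.pem"  # the client key is a credential

  # Reuse the builder if this runner already has one with that name.
  if ! docker buildx inspect "$name" >/dev/null 2>&1; then
    docker buildx create --name "$name" --driver remote \
      --driver-opt "cacert=$certs/ca.pem" \
      --driver-opt "cert=$certs/cert.pem" \
      --driver-opt "key=$certs/key.pem" \
      "tcp://${BUILDKIT_HOST}:1234" || return 1
  fi
  docker buildx use "$name" || return 1

  # Connect to the daemon now, so network or mTLS errors fail fast.
  docker buildx inspect --bootstrap "$name"
}
```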

Results

For our Go services, we use the following cache mount in the Dockerfile to speed up go mod download.

This step was added even before we set up the remote BuildKit daemon, but it had no effect because of the ephemeral nature of our CI runners.

```dockerfile
# Download dependencies
RUN --mount=type=cache,target=/go/pkg/mod \
    echo "=== Go mod cache ===" && \
    du -sh /go/pkg/mod || echo "Empty cache" && \
    cd /go/src/services/app && go mod download -x
```

The very first time this step runs, the cache is empty and the build takes around 2 minutes to complete. On subsequent builds, the cache (a few hundred megabytes) is reused, and the build time drops to just 12 seconds!

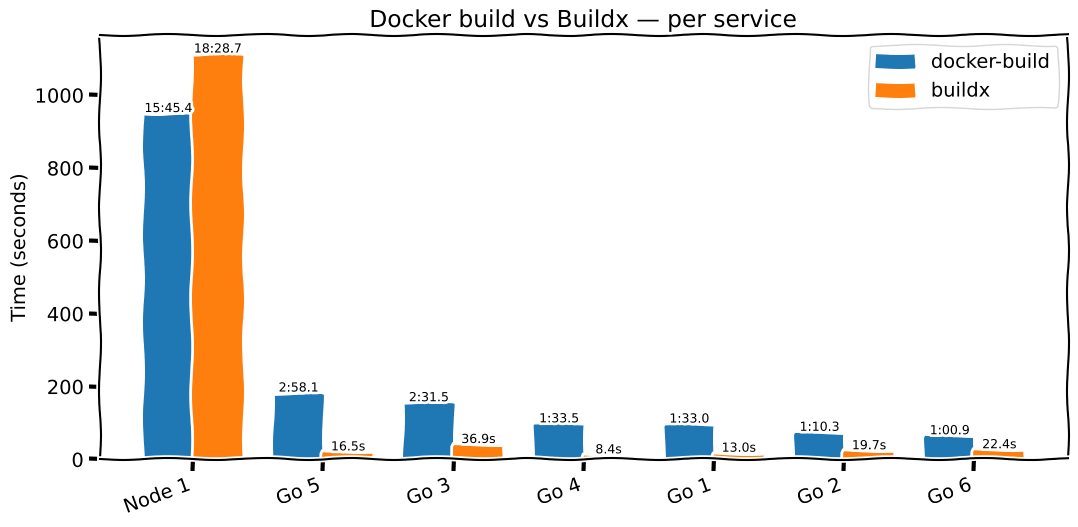

The image below shows the time comparison between our previous docker build and the new docker buildx build using the remote BuildKit daemon with persistent caching.

In the chart above, each service shows two bars: blue for the traditional docker build path (running inside ephemeral CI runners with no persistent cache), and orange for the same build performed with docker buildx build against our remote BuildKit daemon with a persistent cache.

Across our Go services, the improvement is dramatic. For 6 out of 7 services, build time dropped from approximately 2 minutes to 10–17 seconds, a reduction of roughly 86–92%. This matches the expected benefit of retaining dependency and module caches across builds: once warmed, the BuildKit daemon reuses them instead of re-downloading or recompiling, even though the CI runners themselves are ephemeral.

However, the Node.js service showed the opposite behavior: the build time increased by ~3 minutes. After investigation, the cause was the --load flag, which imports the built image back into the local Docker daemon after the build completes. This step requires exporting the entire image over the network, layer by layer. For this particular service, the image was large, and despite the remote builder and CI runners being in the same region, the transfer dominated the build time.

At the moment, we still rely on --load because downstream steps need access to the built image locally for validation. However, a cleaner approach is available: run the remote builder within the same network as the registry or deployment environment and switch to --push:

```shell
docker buildx build \
  --push \
  ...
```

This pushes the image directly to the container registry without exporting layers back to the CI runner, eliminating the network transfer bottleneck entirely. Once builds push directly into your registry, any test or deployment workflows that need the image can simply pull it from there instead of receiving it via --load.
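One way to hand the pushed image to later jobs without --load is to record its digest. docker buildx build can write build results to a JSON file with --metadata-file, and the pushed image's digest is stored there under the containerimage.digest key. The sketch below uses hypothetical function names and a placeholder image name, and parses the file with sed so that jq is not required.

```shell
#!/usr/bin/env bash
# image_digest FILE: print the "containerimage.digest" value from a buildx
# metadata file.
image_digest() {
  sed -n 's/.*"containerimage\.digest": *"\([^"]*\)".*/\1/p' "$1"
}

# build_and_push IMAGE [CONTEXT]: build on the current (remote) builder, push
# straight to the registry, and print the digest of what was pushed.
build_and_push() {
  local image=$1 context=${2:-.}
  local meta
  meta=$(mktemp) || return 1
  docker buildx build --push --tag "$image" --metadata-file "$meta" "$context" || return 1
  image_digest "$meta"
  rm -f "$meta"
}
```

A later job can then run docker pull registry.example.com/app@<digest> (registry.example.com/app being a placeholder for your repository); pulling by digest guarantees it gets exactly the image that was built.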

Conclusion

Using a remote BuildKit daemon with persistent caching provides significant and repeatable performance improvements for dependency-heavy builds. The improvement is not theoretical: cold builds become warm builds, and minutes become seconds.

Cache mounts are the real accelerator in modern Docker builds. But they only work if the builder persists. When the builder is ephemeral, the cache is ephemeral too.

One important remark: this solution does not require Kubernetes. While this post demonstrates a BuildKit daemon running in a Kubernetes cluster backed by a Persistent Volume Claim, the same setup can run on a VM, on a $5/month cloud instance, or even on your laptop. The only requirement is that the CI runner can reach the BuildKit daemon over a secure channel. This can be achieved using Cloudflare Tunnel, Tailscale / WireGuard, or a simple testing setup with ngrok.
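As a starting point for the non-Kubernetes variant, the same rootless daemon can run with plain Docker on a VM. This sketch follows the rootless docker run flags documented in the BuildKit repository and reuses the server-side files from the certificate script; the container name buildkitd, the volume name buildkit-state and the certificate directory are assumptions. The key file must be readable by UID 1000 inside the container, and ca.key should not be copied to the VM at all.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a rootless buildkitd with mTLS on a plain Docker host.
# CERT_DIR must contain ca.pem, server-cert.pem and server-key.pem (never ca.key).
start_buildkitd() {
  local cert_dir=${1:-$PWD/buildkit-certs}
  # The named volume keeps the cache (including cache mounts) across restarts.
  docker run -d --name buildkitd --restart unless-stopped \
    --security-opt seccomp=unconfined \
    --security-opt apparmor=unconfined \
    -p 1234:1234 \
    -v "$cert_dir:/certs:ro" \
    -v buildkit-state:/home/user/.local/share/buildkit \
    moby/buildkit:master-rootless \
    --addr unix:///run/user/1000/buildkit/buildkitd.sock \
    --addr tcp://0.0.0.0:1234 \
    --tlscacert /certs/ca.pem \
    --tlscert /certs/server-cert.pem \
    --tlskey /certs/server-key.pem \
    --oci-worker-no-process-sandbox
}
```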